
Artificial intelligence (AI) has become very trendy over the last six months. With the introduction of ChatGPT, OpenAI took the spotlight in the AI space. This surprises nobody: apart from offering this useful tool to everyday users, OpenAI offers many AI models to developers through its API. This means developers can use them to build almost any AI tool imaginable without needing to train their own model.

The introduction of self-hosted Deno Edge Functions during Supabase Launch Week 7 got me thinking about how easy it has become to build that kind of AI application. In this article, we will look at how to host your own Edge Functions and build an endpoint that communicates with GPT-3.5 or GPT-4, using this new Supabase feature and some OpenAI magic.

Setup

Sign up for a fly.io account and install flyctl.

```shell
brew install flyctl
```

Clone the demo repository to your machine.

```shell
git clone https://github.com/supabase/self-hosted-edge-functions-demo.git
```

Sign in to your fly.io account.

```shell
flyctl auth login
```

Functionality

Now we are all set to add functionality to our application. If you already have Edge Functions, you can drop them into the ./functions folder. If not, no worries: we will go through writing such a function in the next part.

Our function will consist of three steps:

1. Receiving a text
2. Sending it to OpenAI with a summarization prompt
3. Returning a summary of the text

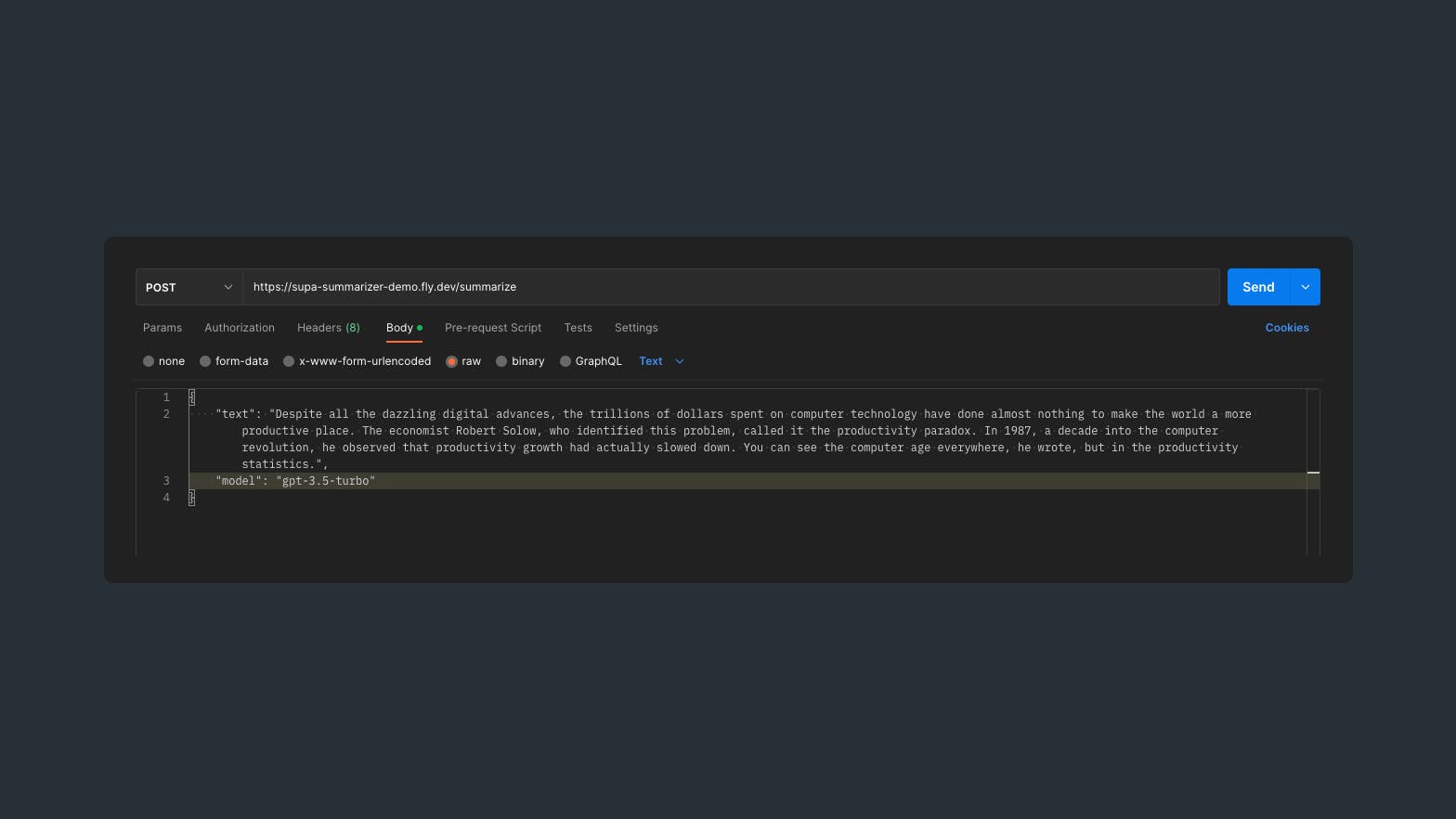

Let's create a new file, ./functions/summarize/index.ts, where we will handle requests to our endpoint. The endpoint URL is made up of our project name and the function's folder name (in my case https://supa-summarizer-demo.fly.dev/summarize).
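The mapping from project name and folder to URL can be sketched as a tiny function. This assumes the default `<app-name>.fly.dev` hostname that fly.io assigns to an app (a custom domain would change the host); `endpointUrl` is a hypothetical helper for illustration, not part of the demo repository.

```typescript
// Hypothetical helper: builds the public URL of an Edge Function hosted on fly.io.
// Assumes the default "<app>.fly.dev" hostname and that the route is the
// folder name under ./functions (e.g. ./functions/summarize -> /summarize).
function endpointUrl(appName: string, functionName: string): string {
  const url = new URL(`https://${appName}.fly.dev`);
  url.pathname = `/${functionName}`;
  return url.toString();
}

console.log(endpointUrl("supa-summarizer-demo", "summarize"));
// → https://supa-summarizer-demo.fly.dev/summarize
```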

```typescript
interface reqPayload {
  text: string;
  model: "gpt-3.5-turbo" | "gpt-4";
}
```

First, we declare an interface for our payload, which consists of the text to summarize (text) and the GPT model we want to use (model).
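TypeScript interfaces are erased at compile time, so annotating the result of `await req.json()` as `reqPayload` does not check what the client actually sent. A minimal sketch of a runtime type guard for the same shape, which also rejects empty text; `isReqPayload` is a hypothetical name, and the handler would still need to decide what to return when the check fails (for example a 400 response).

```typescript
// Same shape as the payload interface above.
interface reqPayload {
  text: string;
  model: "gpt-3.5-turbo" | "gpt-4";
}

const allowedModels = ["gpt-3.5-turbo", "gpt-4"] as const;

// Runtime check: narrows an unknown JSON value to reqPayload.
function isReqPayload(value: unknown): value is reqPayload {
  if (typeof value !== "object" || value === null) return false;
  const candidate = value as Record<string, unknown>;
  return (
    typeof candidate.text === "string" &&
    candidate.text.trim().length > 0 &&
    typeof candidate.model === "string" &&
    (allowedModels as readonly string[]).includes(candidate.model)
  );
}

console.log(isReqPayload({ text: "Some text", model: "gpt-4" })); // → true
console.log(isReqPayload({ text: "Some text", model: "davinci" })); // → false
```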

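```typescript
import { OpenAI } from "https://deno.land/x/openai/mod.ts";

/* ... */

// Read the API key from the environment instead of hard-coding it,
// e.g. after running `fly secrets set OPENAI_API_KEY=...`.
const openAI = new OpenAI(Deno.env.get("OPENAI_API_KEY")!);
```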

Next, we import the OpenAI library, which makes interacting with the API much more convenient. As the URL import shows, Supabase Edge Functions run on Deno, which downloads and caches modules directly from URLs, so you don't need to install large npm packages.
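For comparison, this is roughly the HTTP request a call like `createChatCompletion()` saves us from writing by hand: a POST to OpenAI's Chat Completions endpoint with a bearer token and a JSON body containing `model` and `messages`. It is a sketch that assumes only these two fields are needed; `buildChatRequest` is a hypothetical helper that builds the request without sending it, and its result could be passed to `fetch(url, init)`.

```typescript
type ChatModel = "gpt-3.5-turbo" | "gpt-4";

interface ChatRequest {
  url: string;
  init: { method: "POST"; headers: Record<string, string>; body: string };
}

// Hypothetical helper: builds the raw Chat Completions request without sending it.
function buildChatRequest(apiKey: string, model: ChatModel, prompt: string): ChatRequest {
  return {
    url: "https://api.openai.com/v1/chat/completions",
    init: {
      method: "POST",
      headers: {
        "Authorization": `Bearer ${apiKey}`,
        "Content-Type": "application/json",
      },
      // The same model/messages pair that createChatCompletion() receives in this article.
      body: JSON.stringify({ model, messages: [{ role: "user", content: prompt }] }),
    },
  };
}

const example = buildChatRequest("sk-test", "gpt-3.5-turbo", "Summarize: hello");
console.log(example.url, example.init.method);
```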

```typescript
import { serve } from "https://deno.land/std@0.131.0/http/server.ts";

/* ... */

serve(
  async (req: Request) => {
    // Read the payload sent by the client.
    const { text, model }: reqPayload = await req.json();
    const prompt = `Summarize the following text using a maximum of 300 characters: ${text}`;

    // Ask the selected GPT model for a summary.
    const response = await openAI.createChatCompletion({
      model,
      messages: [{ role: "user", content: prompt }],
    });

    // Return the full completion; the summary is in choices[0].message.content.
    return new Response(JSON.stringify(response), {
      headers: { "Content-Type": "application/json", Connection: "keep-alive" },
    });
  },
  { port: 9005 },
);
```

Here we use the serve() function to handle incoming requests. First, we destructure the JSON body of the request to get text and model, and insert the text into our prompt. Then we pass the model and prompt to the createChatCompletion() function from the OpenAI library. Finally, we return the response, which includes the summary of our text.
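Returning the whole completion object means the client has to dig the summary out itself. In the Chat Completions response format, the generated text sits in `choices[0].message.content`. A minimal sketch of a helper that returns just that text, assuming the library's response follows this shape; `extractSummary` and the trimmed-down `ChatCompletion` type are hypothetical and cover only the fields used here. Inside the handler, it could be used as `JSON.stringify({ summary: extractSummary(response) })`.

```typescript
// Only the fields this sketch reads from a Chat Completions response.
interface ChatCompletion {
  choices: { message: { role: string; content: string | null } }[];
}

// Returns the generated text of the first choice, or throws if there is none.
function extractSummary(completion: ChatCompletion): string {
  const content = completion.choices[0]?.message.content;
  if (typeof content !== "string") {
    throw new Error("Completion contained no message content");
  }
  return content.trim();
}

const sample: ChatCompletion = {
  choices: [{ message: { role: "assistant", content: " A short summary. " } }],
};
console.log(extractSummary(sample)); // → A short summary.
```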

Now the last thing to do is to launch our application. Run the command below in your terminal. You will be asked a few questions: answer yes to copying the existing configuration, choose a name for the project (in my case, supa-summarizer-demo), decline setting up any databases since this project doesn't need one, and confirm that you want to launch it now.

```shell
fly launch
```

That's it! The application is ready to go. Now let's try it out with Postman.
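If you prefer code to Postman, the same call can be made with `fetch`. A sketch assuming the endpoint accepts a POST with a JSON body matching `reqPayload` (the handler reads it with `req.json()`); the URL is the demo app from this article, so substitute your own. `buildSummarizeCall` is a hypothetical helper that only prepares the request; the commented line shows how it could be sent.

```typescript
type Model = "gpt-3.5-turbo" | "gpt-4";

interface SummarizeCall {
  url: string;
  init: { method: "POST"; headers: Record<string, string>; body: string };
}

// Hypothetical helper: the same POST request the Postman call sends.
function buildSummarizeCall(endpoint: string, text: string, model: Model): SummarizeCall {
  return {
    url: endpoint,
    init: {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      // Matches the reqPayload shape that the function destructures.
      body: JSON.stringify({ text, model }),
    },
  };
}

const call = buildSummarizeCall(
  "https://supa-summarizer-demo.fly.dev/summarize",
  "Text to summarize goes here.",
  "gpt-3.5-turbo",
);
// Sending it (inside an async function or a module with top-level await):
// const completion = await (await fetch(call.url, call.init)).json();
console.log(call.init.body);
```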

Summarize

We will use an excerpt from a New York Times article about AI, “It’s Not the End of Work. It’s the End of Boring Work.”, a very fitting topic for our application. (You can try summarizing this article if you want, haha.)

> Despite all the dazzling digital advances, the trillions of dollars spent on computer technology have done almost nothing to make the world a more productive place. The economist Robert Solow, who identified this problem, called it the productivity paradox. In 1987, a decade into the computer revolution, he observed that productivity growth had actually slowed down. “You can see the computer age everywhere,” he wrote, “but in the productivity statistics.”

We pass it in the request body as text and select gpt-3.5-turbo as our model, since the fragment is very short; for longer or more demanding texts, we could use gpt-4 for better results.
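This rule of thumb (gpt-3.5-turbo for a short fragment, gpt-4 when better results matter) could also be encoded on the client. A minimal sketch: the 2,000-character cutoff is an arbitrary, hypothetical threshold rather than a figure from OpenAI or this article, and character count is only a rough proxy for how demanding a text is.

```typescript
type Model = "gpt-3.5-turbo" | "gpt-4";

// Hypothetical cutoff: tune it for your own texts and budget.
const SHORT_TEXT_LIMIT = 2000;

// Picks the cheaper model for short inputs and gpt-4 otherwise,
// unless the caller explicitly asks for the best quality.
function chooseModel(text: string, preferQuality = false): Model {
  if (preferQuality) return "gpt-4";
  return text.length <= SHORT_TEXT_LIMIT ? "gpt-3.5-turbo" : "gpt-4";
}

console.log(chooseModel("A short fragment.")); // → gpt-3.5-turbo
console.log(chooseModel("x".repeat(5000))); // → gpt-4
```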

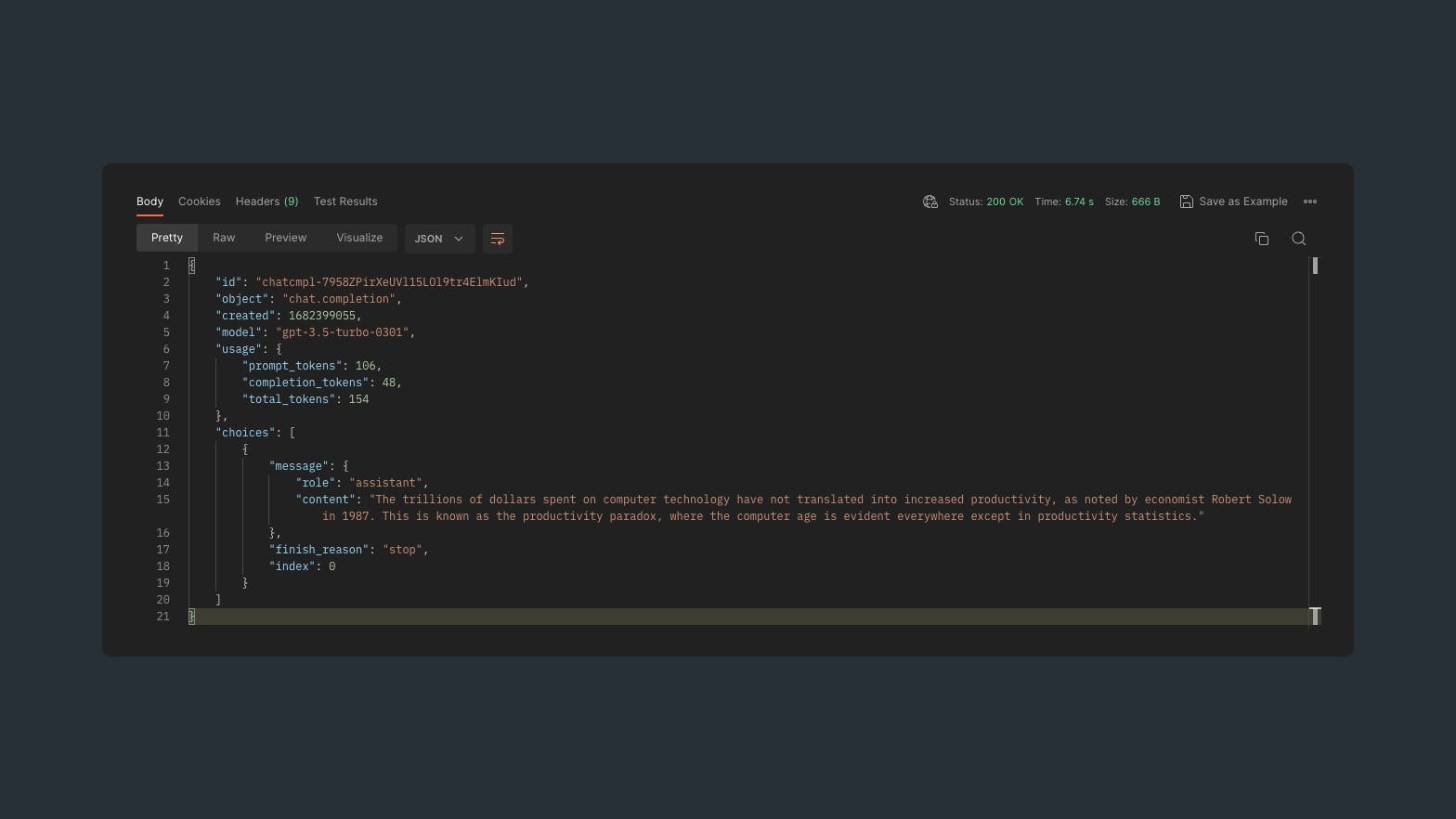

In return, we get the following summary.

The trillions of dollars spent on computer technology have not translated into increased productivity, as noted by economist Robert Solow in 1987. This is known as the productivity paradox, where the computer age is evident everywhere except in productivity statistics.
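The prompt asks for at most 300 characters, but language models do not count characters reliably, so the limit is a request rather than a guarantee. A minimal sketch of a hypothetical post-processing step, `clampSummary`, that the function could apply to the generated text before returning it: it cuts at the last word boundary that fits and appends an ellipsis.

```typescript
// Hypothetical post-processing: enforces a hard character limit on a summary.
function clampSummary(summary: string, maxChars = 300): string {
  const trimmed = summary.trim();
  if (trimmed.length <= maxChars) return trimmed;
  // Reserve one character for the ellipsis.
  const cut = trimmed.slice(0, maxChars - 1);
  // If the next character is whitespace, the cut already ends on a word boundary.
  const endsOnBoundary = /\s/.test(trimmed.charAt(maxChars - 1));
  const lastSpace = cut.lastIndexOf(" ");
  // Otherwise back off to the last space; fall back to a hard cut for one long word.
  const base = endsOnBoundary || lastSpace <= 0 ? cut : cut.slice(0, lastSpace);
  return `${base.trimEnd()}…`;
}

console.log(clampSummary("one two three", 8)); // → one two…
```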

That's it! Now you can easily use GPT-4 without paying for a ChatGPT Plus subscription; instead, you pay only for what you use. I will leave the app running until the end of April and cap it at $5. Feel free to use it if you want to try it out, but I encourage you to build your own implementation.

If you want to learn more about Supabase Edge Functions, check out this blog post by the Supabase team.

Thanks for reading! ❤️ This article is part of the Content Storm which celebrates Supabase Launch Week 7. If you want to be the first one to see my next article, follow me on Hashnode and Twitter!